
Steal Things.

No, I’m not being flippant: that’s how most of the insanely large fortunes throughout history have been built. By theft I don’t mean breaking into a vault or armed robbery; it’s far more subtle than that… But hear me when I say that stealing is, without question, the most profitable business model in history.

So, what do I mean by stealing?

I mean taking things that aren’t yours, or anyone’s for that matter. Think appropriation, extraction, control, or the co-opting of someone else’s labor, property, idea or data. If you doubt it, just look at every empire that’s ever existed, both nation-state and corporate.

The trick? Extract it before the ‘victims’ realise its value.

From the dawn of commerce to the dawn of AI, every major fortune has been built on the same foundation: taking something that wasn’t yours, fencing it off, and pretending you created what’s inside the new boundary. Oh, and then charging people for access to it.

Imperialists did it first.

They turned land, minerals, spices and, sadly, even people into asset classes. They drew lines on maps and said, “This is ours now.” They imposed their arrival at the barrel of a gun: military might over less developed, newfound colonies.

Then came the industrialists.

The oil oligarchs, the mining magnates, the robber barons. They didn’t invent oil; they dug it up and declared ownership. Whoever gets there first gets rich, and everyone else pays rent forever.

Then came the factories. Cars, steel, rail, assembly lines. They didn’t invent human effort; they just systemised and repackaged it. They stole independence from farmers and craftsmen and sold it back as wages. They stole time itself, in hours punched on a clock, and offered weekends as consolation.

And here’s the kicker: every new technology creates a new thing to steal.

Data Was the New Oil

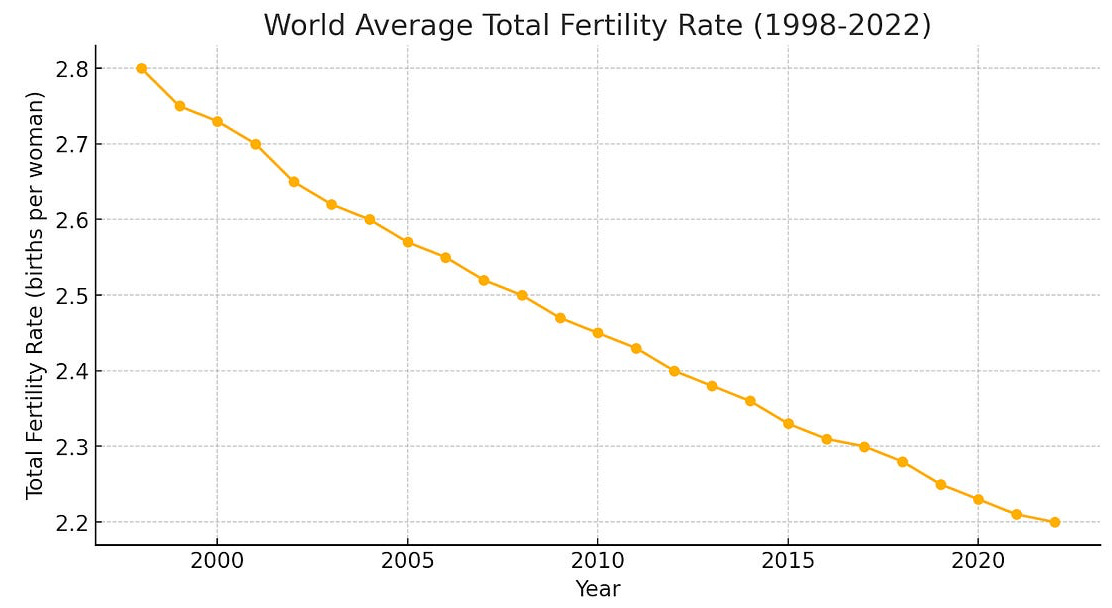

Fast forward to the digital era. The oil of our time became data. In 1995 the biggest seven companies in the world were in the energy business. Today the biggest seven companies mine attention. Both cohorts are in the business of extraction.

Big Tech figured out that the most valuable resource on Earth wasn’t underground — it was inside us. Our clicks, likes, faces, voices, connections, our thoughts… our waking hours. (Side note: Netflix CEO Reed Hastings once quipped that their biggest competitor was sleep.)

They harvested it, repackaged it, and sold it back to us as “personalised experiences.”

Facebook didn’t ask for your data. They just took it.

YouTube didn’t license music. They stole it first, scaled the audience, got huge venture funding and then, with the newfound financial power of Google behind them, cut deals.

Uber didn’t disrupt taxis; it ignored the value of the taxi licences that drivers had paid hundreds of thousands for. It broke the law until it got the law changed, assisted by its $13.2 billion in venture funding and by political donations.

It’s the same playbook over and over again: move fast, flood the zone, make the theft irreversible.

…Then AI Arrived and Stole at Scale

OpenAI’s ChatGPT was trained on the entire internet — every book, every blog post, every picture and video they could scrape. Whole careers, libraries of art, lifetimes of writing and creative output — downloaded without permission. My three books are even in there.

Early outputs from image generators, notably Stable Diffusion, even carried garbled Getty Images watermarks, revealing where the training data came from. The New York Times is suing OpenAI over exactly this, alleging that ChatGPT could reproduce paywalled articles almost word for word. Yep, content wasn’t just learned, it was ‘lifted’.

When a human copies your work, we call it plagiarism. When an AI does it at planetary scale, we call it progress.

Enter Sora 2 —

The AI grift is continuing…

OpenAI just released Sora 2, a text-to-video model that can generate astonishingly realistic clips. People, places, characters, especially copyrighted ones… all of it, drawn from the vast ocean of human creativity that was scraped from the web without permission.

And, you guessed it, they made it opt-out. That means your art, your likeness, your face and your voice can be used unless you explicitly tell them not to. Imagine walking into a shop and announcing: “Hey, we’re taking your merchandise unless you tell us not to.” You can’t make this stuff up.

That’s not consent — that’s corporate gaslighting.

We shouldn’t have to fight for what’s already ours.

For the record: Sora 2’s creators now promise copyright holders more control, with watermarks, provenance tags and verification systems. But that’s not protection; that’s paperwork. They’ve already flooded the zone.

The Flood the Zone Strategy

The most effective form of theft isn’t just sneaky. It’s overwhelming — on purpose.

If you flood the system fast enough, by the time the lawyers arrive, the world has already changed. Ask Uber. Ask YouTube. Ask Meta.

In economics, whoever defines the default wins. Opt-out means “you’ve already lost, you just don’t know it yet.”

The pattern is simple:

- Break the rules.

- Get rich doing it.

- Pay fines later — it’s cheaper than permission.

That’s what’s happening with generative AI. It’s flooding the cultural zone, scraping everything we’ve made to train machines that will eventually outcompete the very humans they learned from. Then, once the economic machine embraces its power, regulators simply side with the money. They always do.

And we’re letting it happen because we’re too busy marvelling at the magic trick to notice our pockets are being picked.

I Vote “Yes” for AI, but…

Now for the ironic part. I believe all of these technologies (Facebook is borderline) have been a net good for humanity. They’ve improved living standards beyond human comprehension, and yes, they’ve caused a lot of pain along the way. But life expectancy and living standards have increased tremendously with every technology revolution. The bit we need to fix is the chicanery: the part where regulators get stooged into letting ‘innovators’ define the rules of a new era. In reality, we only need to make one small change:

Make sure innovators pay for their raw materials.

This single shift would let us benefit from the technology without the largesse going to the fortunate few who got there first, and would allow humanity to benefit at scale, both through usage and through the economic upside.

The way we make that change happen is simple too: we need to have conversations about it. So be sure to share this with an astute friend. Thanks for reading.

Keep thinking,

Steve.

** Get me in to do an AI keynote at your next event. I’ll use this as my testimonial!